Retrieval Augmented Generation (RAG) is attracting considerable interest in the fast-moving field of artificial intelligence. By combining knowledge retrieval with language generation, RAG is changing how AI systems process and generate information. The approach affects a range of AI applications, improving their functionality and their capacity to address challenging problems.

In this article, you'll explore the core components of RAG systems and see how they differ from traditional AI models. You'll gain insight into the mechanics of Retrieval Augmented Generation and its wide-ranging applications in artificial intelligence services. You'll also learn about the key benefits of implementing RAG in AI development, including improved accuracy, enhanced context understanding, and increased transparency.

Understanding Retrieval Augmented Generation

Retrieval Augmented Generation is a natural language processing technique that integrates the strengths of retrieval-based and generative AI models. A RAG system draws on pre-existing knowledge, then processes and consolidates that knowledge to generate context-aware responses in human-like language.

Definition of RAG

RAG extends generative AI by pairing a generative model with a retrieval step. Unlike cognitive AI, which aims to mimic human thought processes, RAG works by feeding information retrieved from external sources into a generative AI model.

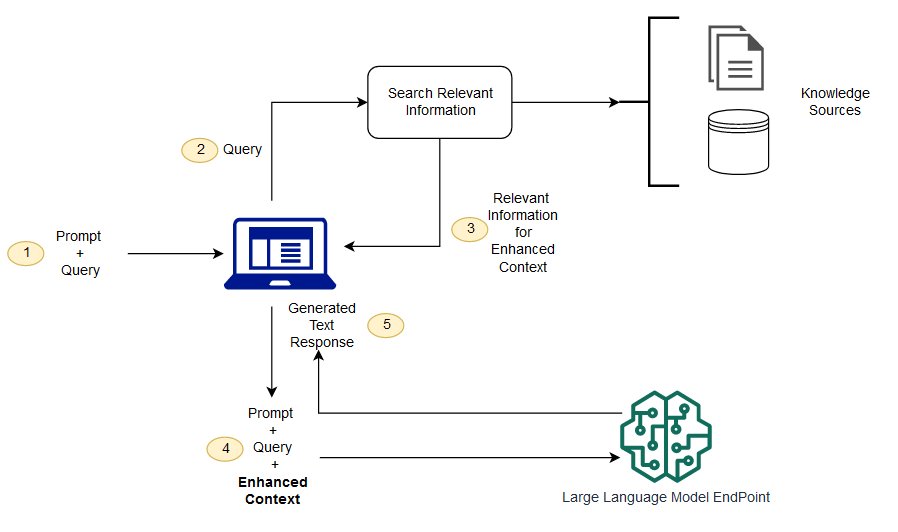

How RAG works

- A retrieval model extracts relevant information from existing sources like articles, databases, and knowledge repositories.

- The generative model synthesizes the retrieved data and shapes it into a coherent, contextually appropriate response.

- By combining the strengths of both models, RAG minimizes their individual drawbacks.
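The steps above can be sketched in a few lines of Python. This is a minimal illustration, not a production design: the `DOCUMENTS` list, the word-overlap `score` function, and the `generate` stub are all assumptions made for the example, and a real system would call a search index and an actual language model instead.

```python
from collections import Counter

# Hypothetical in-memory knowledge base; a real system would query a database or index.
DOCUMENTS = [
    "RAG combines a retrieval model with a generative model.",
    "Vector databases store embeddings for similarity search.",
    "Stemming reduces words to their root form.",
]


def score(query: str, document: str) -> int:
    """Toy relevance score: how many query words also appear in the document."""
    query_words = Counter(query.lower().split())
    doc_words = set(document.lower().replace(".", "").split())
    return sum(count for word, count in query_words.items() if word in doc_words)


def retrieve(query: str, top_k: int = 1) -> list[str]:
    """Retrieval step: return the top_k documents with the highest score."""
    ranked = sorted(DOCUMENTS, key=lambda doc: score(query, doc), reverse=True)
    return ranked[:top_k]


def generate(query: str, context: list[str]) -> str:
    """Generation step: a stand-in for an LLM call that only echoes its context."""
    return f"Q: {query}\nBased on: {' '.join(context)}"


def answer(query: str) -> str:
    """Combine both steps: retrieve first, then generate from what was retrieved."""
    return generate(query, retrieve(query))


print(answer("what do vector databases store"))
```

The point of the sketch is the ordering: the generator never sees the whole knowledge base, only the passages the retriever selected for this query.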

| RAG Model Characteristics | Benefits |
| --- | --- |
| Context understanding | Generates fresh, unique replies |
| Accuracy | Delivers more accurate responses |
| Relevance | Provides relevant information |
| Originality | Produces original content |

What is RAG in AI?

RAG in AI is revolutionizing how AI systems process and generate information. It offers a powerful combination of knowledge retrieval and language generation capabilities, enhancing the performance of various AI applications and expanding their potential to solve complex problems.

Core Components of RAG Systems

RAG systems have three core components that work together to enhance the capabilities of generative AI models:

Retrieval mechanism

The retrieval mechanism extracts relevant information from external sources like databases, knowledge bases, and web pages. It uses powerful search algorithms to query this data, which then undergoes pre-processing steps such as tokenization, stemming, and stop word removal.

| Retrieval Mechanism Steps | Description |
| --- | --- |
| Data Retrieval | Querying external sources for relevant information |
| Pre-processing | Tokenization, stemming, stop word removal |
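As a rough illustration of the pre-processing step, the sketch below tokenizes text, drops stop words, and strips common suffixes. The `STOP_WORDS` set and the suffix list are small assumptions chosen for the example; the `stem` function is a naive suffix stripper, not an established algorithm such as the Porter stemmer.

```python
import re

# Illustrative stop word list (an assumption); real lists are much longer.
STOP_WORDS = {"a", "an", "the", "is", "are", "of", "and", "to", "in", "for", "on"}

# Suffixes tried in order; longer ones first so "ing" wins over "s".
SUFFIXES = ("ing", "ed", "es", "s")


def tokenize(text: str) -> list[str]:
    """Lowercase the text and split it into alphanumeric tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def stem(token: str) -> str:
    """Naive stemming: strip the first matching suffix if at least 3 characters remain."""
    for suffix in SUFFIXES:
        if token.endswith(suffix) and len(token) - len(suffix) >= 3:
            return token[: -len(suffix)]
    return token


def preprocess(text: str) -> list[str]:
    """Tokenize, remove stop words, then stem what is left."""
    return [stem(token) for token in tokenize(text) if token not in STOP_WORDS]


print(preprocess("The retrieved documents are indexed for searching"))
# ['retriev', 'document', 'index', 'search']
```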

Augmentation process

The augmentation process incorporates the pre-processed retrieved information into the input (the prompt) given to the pre-trained LLM. The model's weights stay unchanged; the added context gives the LLM a more complete picture of the topic at generation time.
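One common way to perform this augmentation is to place the retrieved passages in the prompt ahead of the user's question. The sketch below assumes a hypothetical `build_augmented_prompt` helper and an illustrative prompt wording; neither comes from a specific library.

```python
def build_augmented_prompt(question: str, passages: list[str]) -> str:
    """Prepend numbered retrieved passages to the question (hypothetical template)."""
    numbered = "\n".join(f"[{i}] {text}" for i, text in enumerate(passages, start=1))
    return (
        "Answer the question using only the context below.\n\n"
        f"Context:\n{numbered}\n\n"
        f"Question: {question}\nAnswer:"
    )


prompt = build_augmented_prompt(
    "What does a vector database store?",
    ["Vector databases store embeddings for similarity search."],
)
print(prompt)
```

Numbering the passages is a design choice that makes it easier for the model to refer back to a specific passage in its answer.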

Generation model

The augmented context enables the LLM to generate more precise, informative, and engaging responses. RAG leverages vector databases, which store data in a way that facilitates efficient search and retrieval based on semantic similarity.

By combining these components, RAG systems can access up-to-date information, ensure factual grounding, provide contextual relevance, and improve response accuracy. This makes RAG particularly valuable for applications where accuracy is paramount, such as customer service or scientific writing.

RAG Applications in AI

Retrieval Augmented Generation has a wide range of applications in AI, revolutionizing various industries and domains. Here are some notable areas where RAG is making a significant impact:

AI-Powered Risk Management Dashboards

RAG enables the development of AI-powered risk management dashboards that provide real-time insights and recommendations for improving supply chain performance. These dashboards help stakeholders quickly grasp performance levels and identify areas needing attention.

Predictive Maintenance Systems

RAG is used in predictive maintenance systems to examine equipment performance data and flag probable issues before they occur. It can help firms optimize maintenance schedules, decrease downtime, and improve overall operational efficiency.

Supply Chain Optimization

RAG plays a crucial role in supply chain optimization by facilitating scenario analysis and sensitivity testing. It helps decision-makers strategically optimize supply chain strategies and align them with organizational objectives, leading to cost savings and improved effectiveness.

Fraud Detection and Prevention

RAG enhances fraud detection and prevention capabilities by analyzing vast amounts of financial data swiftly and accurately. It identifies anomalies and patterns indicative of fraudulent activities, enabling organizations to take proactive measures to mitigate risks.

| Application | Benefit |
| --- | --- |
| AI-Powered Risk Management Dashboards | Real-time insights and recommendations |
| Predictive Maintenance Systems | Reduced downtime and improved efficiency |
| Supply Chain Optimization | Cost savings and improved effectiveness |
| Fraud Detection and Prevention | Proactive risk mitigation |

RAG's applications extend to customer experience management, healthcare diagnostics, project management, financial analysis, search engines, legal research, and semantic comprehension in document analysis. Its ability to retrieve relevant information and generate contextually appropriate responses makes it a powerful tool across various domains.

RAG vs Traditional AI Models

RAG systems differ from conventional natural language processing (NLP) models in size, complexity, and functionality. The language models at the core of RAG systems learn directly from large volumes of text through unsupervised pre-training, which lets them generalize across a wide range of language tasks without task-specific fine-tuning. Standard NLP models, by contrast, mostly rely on handcrafted features or rule-based systems.

Comparison with standard LLMs

Large Language Models (LLMs) are designed to understand, generate, and manipulate human-like text. The complexity and size of LLMs make them highly capable in understanding the context, nuances, and subtleties of human language. RAG models offer high scalability and adaptability compared to traditional models. Unlike traditional models that require extensive retraining to incorporate new information, RAG models can be easily updated with new knowledge sources.
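To make the "updated without retraining" point concrete, here is a small sketch in which new knowledge becomes available simply by adding a document to the store. The `KnowledgeStore` class and its word-overlap search are hypothetical stand-ins for a real vector database or search index.

```python
class KnowledgeStore:
    """Hypothetical in-memory store; stands in for a vector database or search index."""

    def __init__(self) -> None:
        self._documents: list[str] = []

    def add(self, text: str) -> None:
        """New knowledge is added by indexing a document; no model weights change."""
        self._documents.append(text)

    def search(self, query: str, top_k: int = 1) -> list[str]:
        """Return up to top_k documents that share at least one word with the query."""
        query_words = set(query.lower().split())
        scored = [
            (len(query_words & set(doc.lower().split())), doc)
            for doc in self._documents
        ]
        matches = [(score, doc) for score, doc in scored if score > 0]
        matches.sort(key=lambda pair: pair[0], reverse=True)
        return [doc for _, doc in matches[:top_k]]


store = KnowledgeStore()
store.add("The 2023 policy allows remote work")
print(store.search("2024 policy"))  # only the older document is available

store.add("The 2024 policy requires two office days")
print(store.search("2024 policy"))  # the newly added document now ranks first
```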

Advantages of RAG in information retrieval

RAG models address the challenge of data sparsity in training by leveraging external knowledge sources. By retrieving relevant documents during the generation process, these models can fill in gaps in their training datasets, enabling them to handle a wider range of queries with greater accuracy and confidence.

| Feature | Retrieval Augmented Generation (RAG) | Traditional NLP Models |
| --- | --- | --- |
| Approach | Combines retrieval of relevant documents with text generation | Rule-based or statistical models designed for specific tasks |
| Data Dependency | Relies on external data sources for document retrieval | Depends on handcrafted features and predefined rules |
| Flexibility | Can handle a wide range of queries by retrieving different documents | Limited to the patterns learned from the provided examples |

Use cases where RAG outperforms

RAG models are particularly effective for tasks that require specific information from a large corpus, such as question answering, information extraction, and summarization. By leveraging external data sources, RAG can provide detailed and contextually rich responses, outperforming traditional models in these use cases.

The Mechanics of Retrieval Augmented Generation

Retrieval Augmented Generation (RAG) systems leverage vector databases to efficiently store and retrieve relevant information. The RAG process involves embedding and indexing data, followed by query processing and retrieval.

Vector Databases in RAG

Vector databases play a crucial role in RAG by providing efficient storage and retrieval of high-dimensional vectors. They optimize indexing techniques for fast similarity searches and scale effectively with massive datasets. Vector databases offer superior performance compared to traditional relational databases when handling complex vector data.

Embedding and Indexing

Embedding transforms raw data into dense vector representations that capture semantic meaning. Indexing organizes these vectors for quick retrieval. Techniques like dimensionality reduction and clustering narrow down the search scope, improving efficiency.

| Technique | Description |
| --- | --- |
| k-Nearest Neighbors (KNN) | Retrieves k most similar vectors using brute-force |
| Inverted File (IVF) | Partitions vector space into clusters for fast search |
| Locality-Sensitive Hashing (LSH) | Maps similar vectors to the same hash buckets |
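Two of the techniques in the table can be sketched with the standard library alone. The brute-force search below uses cosine similarity as the similarity measure, and the LSH variant shown is random-hyperplane hashing, one of several LSH schemes; both choices, along with every function name, are assumptions made for illustration.

```python
import math
import random


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two vectors; 0.0 if either vector is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def knn_search(query: list[float], vectors: list[list[float]], k: int) -> list[int]:
    """Brute force: score every stored vector and return the indices of the k best."""
    ranked = sorted(
        range(len(vectors)),
        key=lambda i: cosine_similarity(query, vectors[i]),
        reverse=True,
    )
    return ranked[:k]


def make_hyperplanes(dim: int, n_planes: int, seed: int = 0) -> list[list[float]]:
    """Draw random hyperplane normals; the seed keeps the hashing reproducible."""
    rng = random.Random(seed)
    return [[rng.gauss(0, 1) for _ in range(dim)] for _ in range(n_planes)]


def lsh_bucket(vector: list[float], hyperplanes: list[list[float]]) -> tuple[int, ...]:
    """One bit per hyperplane, set by which side of the plane the vector falls on."""
    return tuple(
        int(sum(v * h for v, h in zip(vector, plane)) >= 0) for plane in hyperplanes
    )


vectors = [[1.0, 0.0], [0.0, 1.0], [0.9, 0.1]]
print(knn_search([1.0, 0.0], vectors, k=2))  # [0, 2]

planes = make_hyperplanes(dim=2, n_planes=4)
# Vectors pointing in the same direction always land in the same bucket.
print(lsh_bucket([1.0, 2.0], planes) == lsh_bucket([2.0, 4.0], planes))  # True
```

Brute-force search compares the query against every vector, so its cost grows linearly with the collection. LSH trades some accuracy for speed by comparing the query only against vectors in the same bucket.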

Query Processing and Retrieval

During query processing, the user's input is converted into a vector representation. The RAG system then searches the indexed vector database to find the most relevant information. Advanced retrieval algorithms like Hierarchical Navigable Small World (HNSW) offer a balance between speed and accuracy.
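The query flow can be sketched as follows. The `embed` function here is a simple bag-of-words count vector over a vocabulary built from the corpus, used only as a stand-in for a learned embedding model, and the search is a plain linear scan rather than HNSW. All names are hypothetical.

```python
import math


def build_vocabulary(texts: list[str]) -> dict[str, int]:
    """Assign each distinct word in the corpus a fixed vector position."""
    words = sorted({word for text in texts for word in text.lower().split()})
    return {word: index for index, word in enumerate(words)}


def embed(text: str, vocabulary: dict[str, int]) -> list[float]:
    """Toy embedding: normalized word counts. Words outside the vocabulary are ignored."""
    vector = [0.0] * len(vocabulary)
    for word in text.lower().split():
        if word in vocabulary:
            vector[vocabulary[word]] += 1.0
    norm = math.sqrt(sum(x * x for x in vector))
    return [x / norm for x in vector] if norm else vector


def search(query: str, index: list[tuple[str, list[float]]],
           vocabulary: dict[str, int], top_k: int = 1) -> list[str]:
    """Embed the query, then rank indexed texts by dot product (cosine on unit vectors)."""
    query_vector = embed(query, vocabulary)
    ranked = sorted(
        index,
        key=lambda item: sum(q * d for q, d in zip(query_vector, item[1])),
        reverse=True,
    )
    return [text for text, _ in ranked[:top_k]]


corpus = ["vector databases store embeddings", "stemming removes word suffixes"]
vocabulary = build_vocabulary(corpus)
index = [(text, embed(text, vocabulary)) for text in corpus]  # indexing happens once

print(search("how do vector databases work", index, vocabulary))
# ['vector databases store embeddings']
```

The documents are embedded once at indexing time, while the query is embedded on every request with the same function, so both live in the same vector space.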

RAG systems face challenges such as data quality, high dimensionality, and limited evaluation metrics. Addressing these challenges is crucial for building robust and effective RAG applications.
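On the evaluation side, the retrieval step is often checked with simple ranking metrics. The sketch below shows two widely used ones, recall at k and reciprocal rank, as an illustration of what such a metric looks like; the document IDs are made up, and these metrics cover only retrieval quality, not the quality of the generated text.

```python
def recall_at_k(retrieved: list[str], relevant: set[str], k: int) -> float:
    """Fraction of the relevant documents that appear in the top k results."""
    if not relevant:
        return 0.0
    hits = len(set(retrieved[:k]) & relevant)
    return hits / len(relevant)


def reciprocal_rank(retrieved: list[str], relevant: set[str]) -> float:
    """1 / rank of the first relevant result, or 0.0 if none was retrieved."""
    for rank, doc_id in enumerate(retrieved, start=1):
        if doc_id in relevant:
            return 1.0 / rank
    return 0.0


retrieved = ["d3", "d1", "d7"]   # hypothetical ranked output of a retriever
relevant = {"d1", "d2"}          # hypothetical ground-truth labels

print(recall_at_k(retrieved, relevant, k=2))  # 0.5
print(reciprocal_rank(retrieved, relevant))   # 0.5
```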

Benefits of RAG in AI Applications

RAG offers several key benefits that enhance AI applications:

Enhanced accuracy and relevance

By grounding responses in up-to-date, domain-specific data, RAG significantly improves the accuracy and relevance of AI-generated outputs. This leads to more reliable and trustworthy AI applications across various industries.

Improved contextual understanding

RAG enables AI systems to better understand the context of user queries by retrieving relevant information from external knowledge bases. This contextual understanding allows for more nuanced and appropriate responses.

Up-to-date information retrieval

RAG ensures that AI applications have access to the latest information by retrieving data from continuously updated sources. This keeps the AI's knowledge current and prevents outdated or irrelevant responses.

Reduced hallucinations

Hallucinations, or AI-generated outputs that deviate from factual information, are a common issue in AI systems. RAG mitigates this problem by anchoring responses in retrieved data, reducing the likelihood of false or nonsensical outputs.

Scalability and efficiency

RAG architectures can efficiently handle large-scale data retrieval and generation tasks. By leveraging advanced indexing and retrieval techniques, RAG systems can quickly access relevant information from vast knowledge bases, enabling scalable AI applications.

Conclusion

Retrieval augmented generation changes how AI systems produce and process information. By fusing the strengths of generative and retrieval-based models, RAG gives models access to current information, improves accuracy, and strengthens context understanding. These improvements lead to more dependable and effective AI applications in a variety of sectors, including risk management and fraud detection.

As AI develops further, RAG stands out because it addresses persistent problems in natural language processing. Developers and enterprises can benefit from its ability to stay flexible while grounding replies in retrieved facts. Explore our AI development services to learn more about how RAG can support your AI initiatives. With RAG, the future of AI promises more trustworthy, intelligent, and context-aware systems that can solve challenging real-world problems efficiently.

Frequently Asked Questions

What is the RAG approach in AI?

Retrieval-Augmented Generation is a technique that combines information retrieval with generative AI models. The technique results in more accurate and contextually relevant responses by retrieving relevant documents and using them to inform the generation process.

What is LLM and RAG?

An LLM (large language model) is a type of AI model trained on massive amounts of text data to generate human-like text. RAG, on the other hand, is a method that enhances the capabilities of an LLM by retrieving relevant information from external sources before generating responses.

What is the difference between RAG and generative AI?

Generative AI on its own relies solely on what a pre-trained model learned during training to generate text from the input. RAG enhances generative AI by adding external information retrieval, which leads to more informed and accurate responses.

What are the three paradigms of RAG?

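The three commonly cited paradigms of RAG are Naive RAG, Advanced RAG, and Modular RAG. All three build on the same core stages of retrieval and generation, with the Advanced and Modular variants adding steps such as re-ranking of retrieved results.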

What is RAG in GenAI?

In GenAI, RAG is often used to enhance the quality of responses. It does so by retrieving external data that is more accurate, up-to-date, and contextually relevant.

Can a RAG cite references for the data it retrieves?

Yes, a RAG system can cite references, listing the sources of the retrieved information alongside the generated response.
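Citing is possible because the system knows where each retrieved passage came from. A minimal sketch, assuming a hypothetical `Passage` record that keeps the source next to the text and made-up file names:

```python
from dataclasses import dataclass


@dataclass
class Passage:
    """A retrieved chunk of text together with where it came from."""
    source: str
    text: str


def answer_with_citations(answer_text: str, passages: list[Passage]) -> str:
    """Append a numbered source list for the passages used to produce the answer."""
    references = "\n".join(
        f"[{i}] {passage.source}" for i, passage in enumerate(passages, start=1)
    )
    return f"{answer_text}\n\nSources:\n{references}"


passages = [
    Passage(source="handbook.pdf", text="Refunds are processed within 14 days."),
    Passage(source="faq.html", text="Refund requests require an order number."),
]
print(answer_with_citations("Refunds take up to 14 days [1].", passages))
```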
